Can a New Algorithm Make Self-Driving Cars Safer?

Buffaloboy/Shutterstock

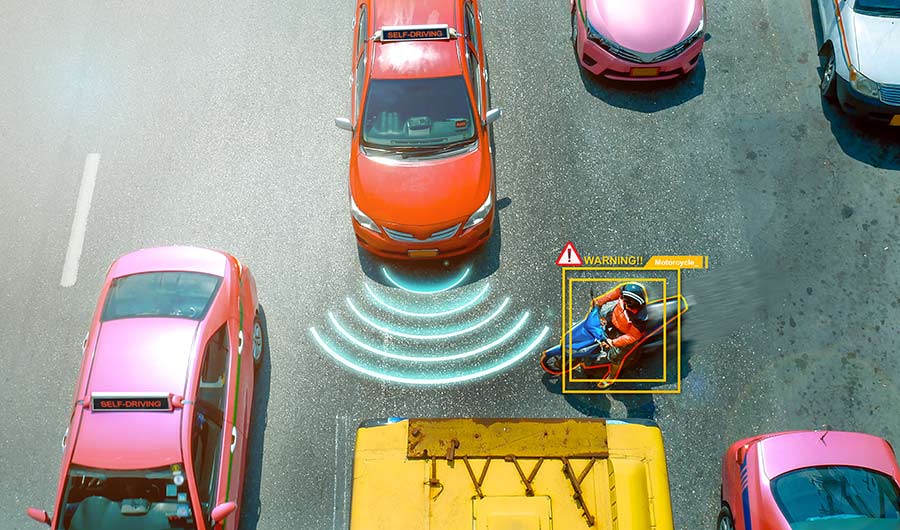

(Inside Science) -- A driverless car is controlled not by a person but by a system of sensors and processors. Tests of autonomous driving have been underway in many countries for years, and Germany wants to permit driverless cars across the country by 2022. As the technology develops, researchers continue to explore ways to improve the algorithms that make driving decisions and, in turn, to make roadways safer.

A team of three doctoral students at the Technical University of Munich published details of their approach today in the journal Nature Machine Intelligence.

They employ a theoretical computer science technique known as formal verification, says Christian Pek, the study’s lead author. “With these kind of techniques you can ensure properties of the system, and in this case we can ensure that our vehicle doesn’t cause any accidents.”

The paper shows for the first time that this approach works in arbitrary traffic scenarios, Pek said, as well as in three different urban scenarios where accidents most often occur: turning left at an intersection, changing lanes and avoiding pedestrians. “Our results show that our technique has the potential to drastically reduce accidents caused by autonomous vehicles,” he said.

Whether the algorithm represents a substantial improvement over current techniques, which are based on accepting an inherent amount of collision risk, would have to be proved in tests. Other researchers believe that depending on algorithms as the primary source of improvement may overlook the opportunity for human drivers to collaborate with artificial intelligence.

The algorithm works by predicting all potential behaviors in a driving scenario, said study author Stephanie Manzinger. “We do not consider only a single future behavior, like a car continuing at its speed and direction, but instead consider all the actions that are physically possible and legal under traffic rules,” she explained. The algorithm then plans a range of fallback measures to ensure the vehicle doesn’t cause any harm.
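To make the idea concrete, here is a minimal sketch in Python. It is not the researchers' implementation. It shrinks the problem to a single lane with one car ahead, and every name and number in it (`Vehicle`, `travel`, `reachable_interval`, `fallback_is_safe`, the acceleration limits, the speed limit, the safety margin) is an assumption chosen for illustration. The sketch computes every position the car ahead could legally and physically reach, then checks that an emergency-braking fallback keeps the driverless car behind the worst case.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    position: float  # metres along the lane
    speed: float     # metres per second, assumed to be within the speed limit


def travel(speed, accel, t, v_max):
    """Distance covered in t seconds at constant accel, with speed kept in [0, v_max]."""
    if accel < 0:
        t_eff = min(t, speed / -accel)           # the car stops; it does not reverse
        return speed * t_eff + 0.5 * accel * t_eff ** 2
    if accel > 0:
        t_acc = min(t, max(0.0, (v_max - speed) / accel))
        dist = speed * t_acc + 0.5 * accel * t_acc ** 2
        return dist + v_max * (t - t_acc)        # cruise at the limit afterwards
    return speed * t


def reachable_interval(v, t, a_min=-8.0, a_max=3.0, v_max=14.0):
    """All positions the vehicle could occupy at time t under the assumed limits."""
    lo = v.position + travel(v.speed, a_min, t, v_max)   # hardest braking
    hi = v.position + travel(v.speed, a_max, t, v_max)   # hardest legal acceleration
    return lo, hi


def fallback_is_safe(ego, lead, ego_brake=-6.0, steps=60, dt=0.1, margin=1.0):
    """Check a full-braking fallback against every possible behavior of the lead car.

    This is a sampled check over a fixed horizon, a simplification of a
    formal proof over continuous time.
    """
    for k in range(steps + 1):
        t = k * dt
        ego_pos = ego.position + travel(ego.speed, ego_brake, t, 14.0)
        lead_lo, _ = reachable_interval(lead, t)
        if ego_pos + margin > lead_lo:           # worst case: lead brakes hardest
            return False
    return True


ego = Vehicle(position=0.0, speed=10.0)
print(fallback_is_safe(ego, Vehicle(position=30.0, speed=10.0)))
print(fallback_is_safe(ego, Vehicle(position=2.5, speed=10.0)))
```

In this toy setup, a planner would commit to a maneuver only while `fallback_is_safe` holds for the resulting state, so a braking option always remains that avoids every legal behavior of the car ahead.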

Driverless cars have the ability to use advanced sensors to compute thousands of possible scenarios, and choose the safest course of action, said Pek -- something people can’t necessarily do in the moment of decision. “But most methods are not able to predict what could be in the future, but our method could predict all potential future evolutions of the scenario, given the traffic participants perform legal behaviors.”

One challenge is that the algorithm assumes the car can see the road and any obstacles or other road users, such as pedestrians or cyclists. It also assumes that other cars on the road obey physical and legal constraints, such as not greatly exceeding the speed limit. The researchers also tested the algorithm in urban situations, not in rural or high-risk environments.

While research in this area of vehicle safety is crucial, a better algorithm may not be the answer to autonomous driving concerns, says Bryan Reimer, a research scientist in the MIT Center for Transportation and Logistics.

“Society hasn’t answered, how safe is safe enough?” he said. The premise in many academic papers is that driverless cars can be adopted once they can be trusted to drive more safely than humans do. But Reimer says that doesn’t go far enough. “We are not ready for robotic error to harm people,” he said. It’s important to define what is appropriately safe. Different countries are still trying to wrestle legal standards to fit a future driverless world.

Robotic error will differ from human error. Driverless cars are not going to fall asleep or get distracted when a text message pings. But they will err in other ways, for example by mistaking a blowing piece of trash for a person. “Machine intelligence is really good at black-and-white decisions and getting better at others, while humans are adept at making decisions in gray areas,” said Reimer, who gave a TEDx talk called “There’s More to the Safety of Driverless Cars than AI.”

“We need to be thinking less algorithmically,” said Reimer. He points to the aviation industry as an example: Decades ago, there were plans to automate the pilot out of the cockpit, but the industry soon discovered that wasn’t the best plan. Instead, they aimed to couple human expertise with automation. “In airplanes, people work with automation and leverage it and take on new responsibilities,” Reimer explained. “That’s what has driven aviation safety to where we are today.”

So how safe is safe enough? Reimer says that it’s about creating a culture of safety. Anything shown to be substantively safer, even a 5% to 10% improvement, would be a starting point but will not be acceptable in the long haul. Instead of a safety standard, the goal should be a process of continual improvement -- something akin to the way the FDA certifies new drug therapies or medical devices. “Anything that is safe enough today is not safe enough tomorrow,” he said.

Study authors Pek and Manzinger plan to further advance their technique by helping to find a standard of operation and by moving their algorithm out of a computer model and into a production vehicle. “It’s one step closer to bringing this to reality,” said Manzinger.