When Scientists Find Nothing: The Value of Null Results

Yuen Yiu

American Institute of Physics

(Inside Science) -- How long would it have taken Edison to invent the lightbulb if he and his team of workers hadn’t kept track of all the failures? From platinum filaments to animal hair, his team built a library of thousands of materials before patenting carbonized bamboo as the best material. Decades more would pass before Hungarian Sándor Just and Croatian Franjo Hanaman identified tungsten, the type of filament still used in incandescent lightbulbs today, as an even better material.

In science, these “failures” are sometimes known by their less disparaging name -- null results. They are an integral part of the exploratory nature of research. Finding a way to share this seemingly boring information can save scientists from repeating each other’s mistakes.

Some of the most significant physics discoveries in the past decade, notably the detections of the Higgs boson by the Large Hadron Collider and gravitational waves by the LIGO collaboration, happened only after decades of null results helped fine-tune the experimental efforts that eventually made the discoveries.

These advances netted the Nobel Prize in physics in 2013 and 2017, respectively. But that doesn’t mean physicists have it all figured out. The LHC itself produces so much data that researchers can’t even record it all. And some research groups may struggle to find the resources or incentive to share less noteworthy null results.

For physicists to navigate through the wrong turns and dead ends that are an inevitable part of their jobs, they must weigh scientific considerations against practical concerns such as time, money and credit. It’s a complicated equation that researchers are still working to balance.

Congratulations! You’ve found nothing

“Over the last 100 years, the nature of scientific communication has not changed,” said Krishna Ratakonda, a computer scientist at IBM. “You do some data gathering and fieldwork, then you do some analysis and hypothesis and statistical testing, etc. Finally, you present some thesis and publish that as a paper.”

The scientific method as we know it is centered around the idea of coming up with a hypothesis, gathering and using data to prove or disprove it, then rinsing and repeating. Elegant on paper due to its simplicity, the process is complicated by the fact that null results often don’t get published, which may doom different research efforts to gather the same data over and over again.

After all, if you tested beard hair and found that it makes a terrible lightbulb filament -- as Edison did -- and decided to write a paper about it, it is unlikely to be accepted for publication in a peer-reviewed journal, and probably for good reasons.

One reason that null results often don’t get shared is that, compared to positive results, their importance is hard to intuitively judge. For example, imagine you found a material that can superconduct at a record high temperature -- clearly important. But if you tested a material thinking it might be superconducting but it turned out to be quite ordinary, it’s hard to know how important that information is.

Epistemologists -- philosophers who think about the concept of knowledge -- have a thought experiment that illustrates the logical framework necessary for assessing the importance of negative results, and it has a cool name.

The raven paradox

Let’s say the main goal of your research is to prove the following hypothesis: All ravens are black. Logic would allow you to frame your hypothesis alternatively: No nonblack item can be a raven.

So, the paradox is: Can you look at an apple, and seeing that it isn’t black and isn’t a raven, publish that as evidence for your hypothesis? That seems preposterous.

Epistemologists would argue that it depends on the context of the research. Since the amount of confirmation provided by the red apple is negligibly small, it has practically no value. But if you can gather all the nonblack objects in the world and show that none of them is a raven, then you would have a case for your paper. This statistics-based perspective on the raven paradox is known as the Bayesian solution, named after the 18th century English statistician Thomas Bayes.

“The raven paradox is something that arises in the relation between evidence and hypotheses,” said Roger Clarke, a philosopher from Queen’s University in Belfast, Northern Ireland. “The Bayesian approach is to ask, to what degree does evidence confirm a hypothesis? In other words, if the evidence raises the probability of that hypothesis to be true.”

Consider this scenario. In front of you sit two boxes, and you know one of them contains a raven. Your goal is to find out which one. You open the box on the left and see it’s empty -- technically a null result. However, the result is just as valuable to you as if you had opened the box on the right, finding the raven and achieving a positive result, because either way, you now know which box contains the raven.

Now consider a different premise where your goal is the same, but there are a million boxes and still only one of them contains a raven. The discovery of an empty box is suddenly much less informative than if you had opened the box with the raven -- your null result is now practically worthless compared to a positive result, i.e., actually finding the raven.
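
The two scenarios above can be put into numbers. What follows is a minimal Python sketch, not something offered by the researchers quoted here. It assumes the raven is equally likely to be in any box, and it measures how much opening one box reduces your uncertainty about the raven's location, in bits. The function name `information_gain_bits` is made up for illustration.

```python
import math


def information_gain_bits(n_boxes: int, found_raven: bool) -> float:
    """Bits of uncertainty removed by opening one box out of n_boxes.

    Assumes exactly one raven, equally likely to be in any box.
    """
    if n_boxes < 2:
        raise ValueError("need at least two boxes for there to be any uncertainty")
    # Before opening anything, the raven could be in any of n_boxes places.
    prior_entropy = math.log2(n_boxes)
    # Afterward, it is either located (1 place) or in one of the unopened boxes.
    places_left = 1 if found_raven else n_boxes - 1
    posterior_entropy = math.log2(places_left)
    return prior_entropy - posterior_entropy


for n in (2, 1_000_000):
    empty = information_gain_bits(n, found_raven=False)
    found = information_gain_bits(n, found_raven=True)
    print(f"{n:>9} boxes: empty box = {empty:.3g} bits, raven found = {found:.3g} bits")
```

Under these assumptions, both outcomes are worth exactly one bit when there are two boxes. With a million boxes, finding the raven is worth about 20 bits, while an empty box is worth on the order of a millionth of a bit.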

In practice, this statistical perspective on the raven paradox can be used to weigh the importance of a null result -- how far does the data push the envelope compared to what was already known?

“If you figured out a way to do an experiment that would improve the sensitivity by a factor of a thousand -- even if you don’t see anything -- that’s a null result, but it would still be a big discovery,” said Peter Meyers, a particle physicist and astrophysicist from Princeton University.

For example, if a theory predicted at least one treasure under every 1,000 rocks in a specific area, and you figured out a way to turn over 2,000 rocks and still found nothing -- your results are null, but still valuable since they put a limit on how likely the theory is to be true.
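
To see how such a limit might work, here is a rough sketch in Python. It reads the theory as saying each rock independently hides a treasure with a probability of 1 in 1,000, and it pits that theory against a single rival claim that there is no treasure at all. Both of those readings, the 50-50 starting belief and the function names are assumptions made for illustration.

```python
def prob_nothing_found(treasure_rate: float, rocks_turned: int) -> float:
    """Chance of finding no treasure at all if each rock independently
    hides one with probability treasure_rate (an assumed model)."""
    return (1.0 - treasure_rate) ** rocks_turned


def belief_after_null(prior: float, treasure_rate: float, rocks_turned: int) -> float:
    """Bayes' rule: updated belief in the theory after a null result.

    The rival hypothesis (assumed) is that there is no treasure,
    so under it a null result is certain.
    """
    likelihood_theory = prob_nothing_found(treasure_rate, rocks_turned)
    likelihood_rival = 1.0
    evidence = prior * likelihood_theory + (1.0 - prior) * likelihood_rival
    return prior * likelihood_theory / evidence


p_null = prob_nothing_found(1 / 1000, 2000)
posterior = belief_after_null(0.5, 1 / 1000, 2000)
print(f"Chance of a null result if the theory is true: {p_null:.3f}")
print(f"Belief in the theory after the null result:    {posterior:.3f}")
```

Under these assumptions, turning over 2,000 rocks and finding nothing would happen only about 13.5% of the time if the theory were true, and a 50-50 starting belief in the theory drops to roughly 12%.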

“When you look for things and can’t find them, you publish a limit. And there's nothing thought odd about publishing that at all,” said Meyers. “It can help rule out some theories that people used to believe and guide future theories.”

In a perfect world, scientists would get paid to look under every rock and pebble and keep track of everything they have or have not found. In reality, it is difficult to know the significance of a result before performing the experiment -- experiments that cost money.

Money, money, money

“As science progresses, experiments tend to get more expensive,” Meyers said. “It's hard to justify, say, a 10 trillion-dollar experiment and say, ‘We’re just going to explore, maybe we won't find anything.’ It's hard to look somebody in the eye and say that. Whereas if it was for, say, a thousand dollars, you can.”

The difference in material costs and potential payoff for different experiments makes some of them more of a gamble than others.

“It's very rare that a big telescope is built, and you come back and say, ‘Turned out there was nothing to see.’ That doesn't happen with telescopes, but with a particle accelerator, it could happen,” said Meyers.

This wager is central to the debate in Europe and China over whether to build the world’s next-generation particle collider.

What gets saved and what gets trashed

The LHC is one expensive instrument that did get the green light to explore the unknown, but for the machine to even function, researchers must quickly sift through the data and discard more than 99% of it. The particle collisions produce roughly 600 terabytes worth of information every second -- most of which is expected to be empty boxes with no ravens.

Scientists and engineers use a combination of hardware and software solutions to whittle this ocean of information down to about a gigabyte per second, retaining only the most interesting bits. The data is then shared via a global computer grid for more than 8,000 physicists around the world to access and analyze.
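
A quick back-of-the-envelope calculation shows how steep that cut is. The Python sketch below takes the two rounded figures quoted above at face value (600 terabytes per second produced, about 1 gigabyte per second kept) and assumes decimal units; the function names are illustrative only.

```python
TERABYTE = 10**12  # bytes, decimal convention (an assumption)
GIGABYTE = 10**9


def reduction_factor(raw_bytes_per_s: float, kept_bytes_per_s: float) -> float:
    """How many times smaller the retained stream is than the raw stream."""
    return raw_bytes_per_s / kept_bytes_per_s


def fraction_discarded(raw_bytes_per_s: float, kept_bytes_per_s: float) -> float:
    """Share of the raw data that is thrown away."""
    return 1.0 - kept_bytes_per_s / raw_bytes_per_s


raw = 600 * TERABYTE
kept = 1 * GIGABYTE
print(f"Reduction factor: {reduction_factor(raw, kept):,.0f}x")
print(f"Discarded: {fraction_discarded(raw, kept):.4%}")
```

With these rounded inputs, the retained stream is 600,000 times smaller than the raw one, which means about 99.9998% of the data is discarded.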

It is common for large international projects to have data repositories dedicated to sharing their data. In addition to the LHC, other large-scale collaborations such as the Laser Interferometer Gravitational-Wave Observatory as well as many national laboratories around the U.S. all have centrally organized and dedicated servers that openly share their data with scientists around the world.

“The government sees data as the product of the research they are funding, and in a sense, that's what they're buying,” said Meyers. “If they choose to fund millions of dollars for an experiment -- even if the scientists end up not finding anything, they damn well are going to make sure to have the data, right?” (His question was rhetorical.)

However, for smaller research efforts -- for example, a graduate student working at a university who made a relatively uninteresting material that did not exhibit certain properties as expected -- the data is less likely to be made known to other researchers. (That graduate student was me, and probably every graduate student ever.)

Certain research fields, such as materials science, are more decentralized by their nature, compared to fields that rely more heavily on large-scale collaborations, such as high energy particle physics or dark matter research -- think the invention of the lightbulb versus the LHC. The smaller null discoveries -- such as how beard hair doesn’t make very good lightbulb filaments -- are less costly, but can add up if the same mistakes are repeated many times over by everyone working in the same field. Scientists debate whether there are better ways for these fields to share results that don’t meet the bar to be published in a scientific journal.

Going forward with carrots or sticks?

“Research grants are trying to develop initiatives for open data, usually by either requiring or giving incentives for the people who get the grant money to take that extra step and make their findings available,” said David Dror, a reference librarian at the University of Illinois at Chicago.

However, just demanding that scientists share their data may not be enough and can even be counterproductive if not done correctly.

“Very few experiments, especially in high energy physics, would publish raw data. And there is a good reason for that. Uncalibrated raw data is oftentimes useless and can be misleading. For people who are not involved with the experiment to analyze the raw data, in a lot of cases it won’t be very useful,” said Meyers.

Dror agrees. “We can have researchers just submit their data to some repository, but it won’t actually do any good unless they give enough information such that other scientists can tell what that data is about,” he said.

A stricter requirement for grantees to process and share all their data would also add to the existing logistical costs for scientists to do research. Researchers nowadays, especially university professors, already spend plenty of time performing duties not directly related to research, such as grant writing and teaching.

There is also the issue of incentives. Tenure at many research universities is granted based on an evaluation process that relies heavily on other researchers citing your work. Ratakonda, the researcher from IBM, thinks this reliance on publication citations when considering tenure contributes to the lack of motivation for researchers to share their unpublished data.

Blockchain, a decentralized method for distributing information best known for its application in cryptocurrencies such as bitcoin, may offer a way for researchers to share their data while keeping track of ownership and merit beyond the current publication and citation model. It may be especially useful for research efforts that lack a centralized organizing body like the LHC or LIGO.

“[Blockchain] can really truly decentralize data collection -- who does the auditing, who does the curation and who does the analysis -- all of this can be different parties, and they can come together in a trusted way. That’s really, I think, the benefit here,” said Ratakonda.

“People need to feel like it's worth sharing that data, and a lot of it is the culture, of what people feel is worth sharing,” said Dror. “Because ultimately, it still depends on the person who gathered the data to take it upon themselves to put the data out there.”

Epilogue: Standing on the shoulders of null results

Of all the avenues of scientific inquiry, dark matter research might be the one that best showcases the struggle to understand the value of null results.

“You can say that the entire history of dark matter research has been nothing but negative results, which makes it a little unusual,” said Meyers.

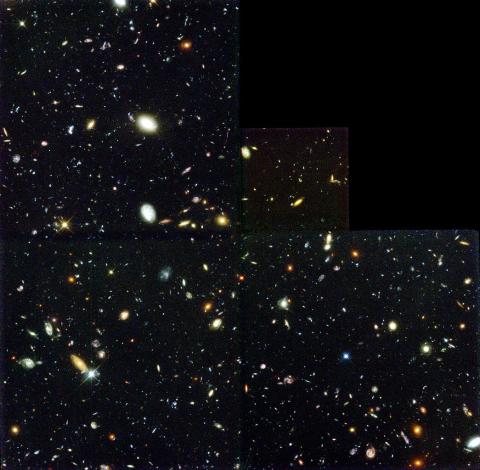

The field can be traced back to a series of discoveries in the 1960s and ’70s. Most notably, the astronomer Vera Rubin, who passed away in 2016, observed that the rotational motion of galaxies cannot be adequately explained by the known laws of physics. This prompted the theory that ordinary matter does not make up most of the mass in our universe, and there must exist another form of matter unknown to science. Another possible explanation is that there is something wrong with our understanding of gravity.

Today, almost half a century after Rubin discovered the galactic anomaly, physicists and astronomers are still in the process of testing and eliminating theories that propose explanations and ways to confirm or refute them. Although many had advocated for Rubin to be honored with a Nobel Prize before she passed away, the lack of a definitive detection of dark matter may have hurt her case.

“The thing about ravens, you can find them pretty easily -- you can just grab a raven and check what color it is, but the situation might be different with dark matter,” said Clarke.

“I'm wildly speculating here -- I am a philosopher, not a physicist -- but I’m guessing that it's not like we can just grab some dark matter and see what it looks like. It may be that the best we can do is to look all over the place everywhere and in every way possible.”

If we ever do find the elusive material, the scientists who get the glory will have built their success on the foundations laid by all those who found nothing.