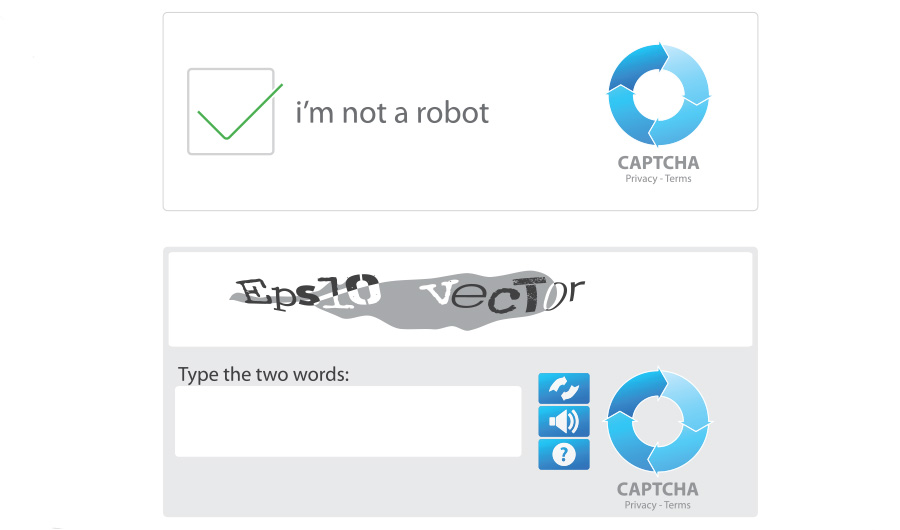

BRIEF: Robots Break Popular ‘I Am Not a Robot’ Tests

Image credits: metrue via Shutterstock

(Inside Science) -- Humans, it turns out you’re not as special as you think. A new computer model can efficiently identify the warped text in visual puzzles called CAPTCHAs. That’s right, robots can now decipher some “I am not a robot” tests.

First invented in the late 1990s, “Completely Automated Public Turing Test(s) To Tell Computers and Humans Apart,” or CAPTCHAs, protect websites against automated software programs trying to register for new accounts, rig online polls, scrape email addresses and spam message boards.

Text-based CAPTCHAs thwart the bots by squishing and twisting letters, drawing lines through them or otherwise constructing a distorted alphabet soup that must be deciphered. Humans, most of the time, rise to the challenge. Computers are typically stumped.

But now a team of researchers from Vicarious, an artificial intelligence company based in San Francisco, has developed an efficient way to give machines nearly the same accuracy as humans on these text-based puzzles.

Inspired in part by the way the visual cortex of mammals works, the new model solved a variety of text-based CAPTCHAs with an accuracy above 50 percent. For example, it solved reCAPTCHA, a Google-owned system, with an accuracy rate of 66.6 percent. Human accuracy, in comparison, is 87.4 percent.

Compared to other state-of-the-art machine learning models, the new system required significantly less training data to crack the CAPTCHAs, and was more robust when faced with small changes in character spacing.

The “Official CAPTCHA Site” declares the puzzles a “win-win situation.” If a CAPTCHA is broken, an AI problem is solved, it states.

Internet sites may be less sanguine if the new model results in a flood of bot attacks. The Vicarious researchers offer a straightforward solution: “Websites should move to more robust mechanisms for blocking bots,” they write in their paper, published today in Science. In other words, humans -- you need to up your game.